

initial changes


- .gitignore: 2 additions, 0 deletions

- README.md: 3 additions, 3 deletions

- maze_env.py: 1 addition, 8 deletions

- run_main.py: 18 additions, 20 deletions

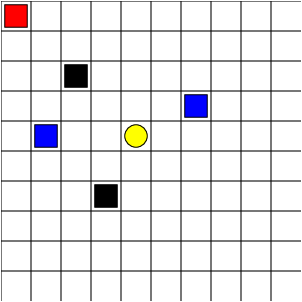

- task1.png: 0 additions, 0 deletions

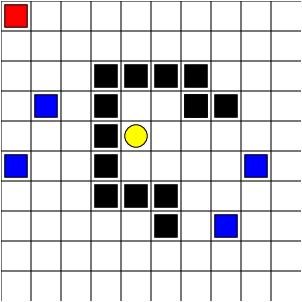

- task2.png: 0 additions, 0 deletions

- task3.png: 0 additions, 0 deletions

.gitignore

New file (mode 0 → 100644)
